Five percent of the world’s population lives with disabling hearing loss, a group that includes both the Deaf and the hard of hearing. Everyone should be able to know what is happening in their own home, but for this population that isn’t the case. When you’re deaf, this lack of situational awareness means that if you don’t see something happen, it’s as if it never happened. Hearing is easy to take for granted, but being unable to access sound can be disorienting and dangerous, and for the millions of people living with disabling hearing loss it can mean the difference between life and death.

Current accessibility products are extremely limited. Assistive hearing devices, such as cochlear implants, help indicate or amplify sound but don’t assign meaning to what is heard. Visual alert systems can signal a doorbell or an alarm, but each serves only a single purpose.

The Deaf and hard of hearing simply have nothing that can help them tell a microwave from a crying baby or from breaking glass, let alone do so with any level of confidence.

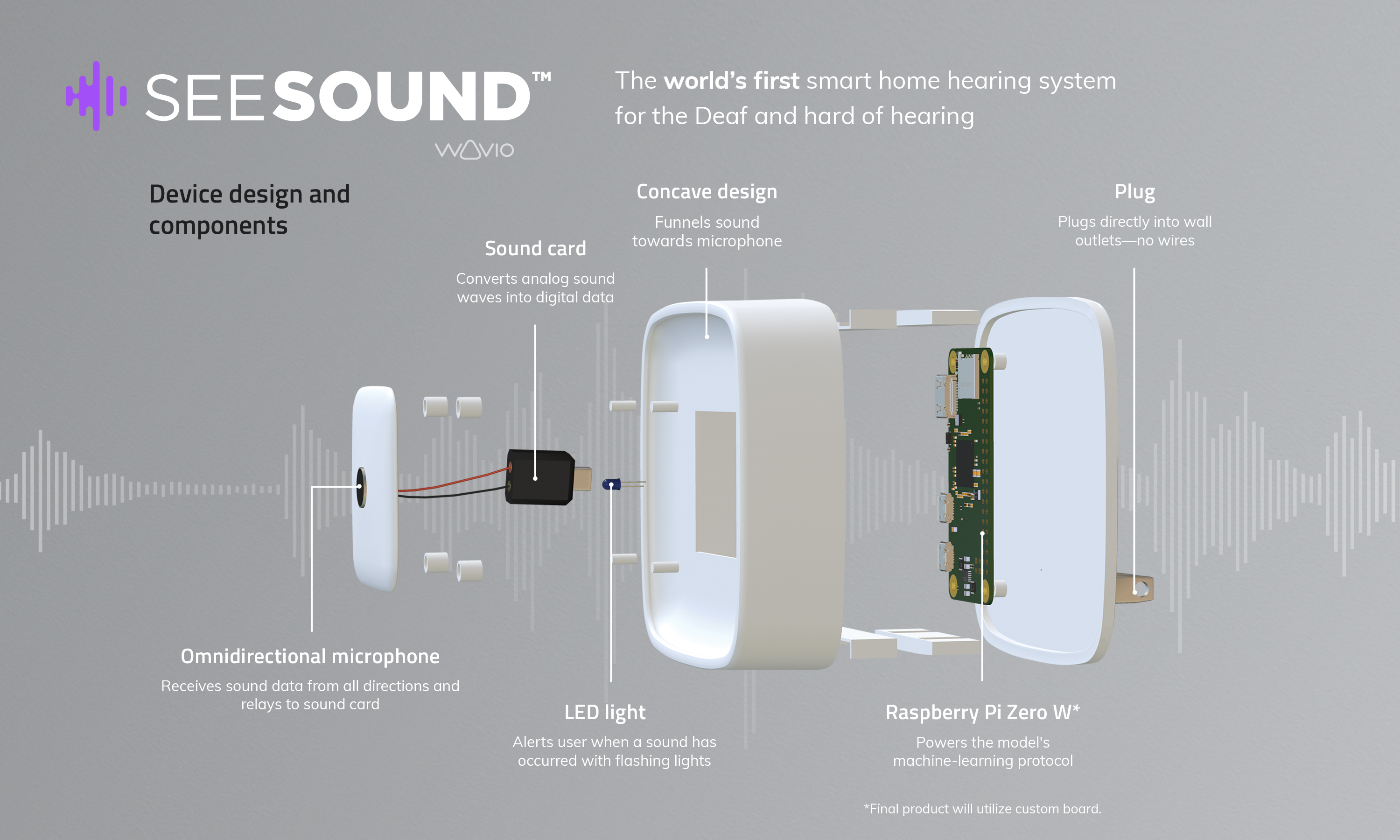

Recognizing this unmet need, we invented See Sound, the world’s first smart home hearing system. Users plug one or more See Sound units directly into wall outlets and connect them to the app on their phone. When a sound occurs, the nearest See Sound unit interprets it, makes a prediction based on its confidence level, and alerts the user on their smart devices.

Although sound recognition technology is advancing rapidly, smart devices for the Deaf and hard of hearing remain severely lacking. The problem with smart product accessibility is twofold. First, mainstream smart home products rely on audio output and voice control, which makes them virtually useless to people who don’t communicate through spoken language. To serve Deaf consumers, the interface has to be redesigned from auditory to visual. Second, and the greatest obstacle to providing situational awareness, is a shortage of data. Building a machine-learning model that can identify sounds with any reliable accuracy takes millions of labeled sound samples.

To be effective in this space, a product has to solve both problems. That is exactly what we did with See Sound, finally giving the Deaf and hard of hearing visual access to the sounds around them.

To fix the user interface, we replaced auditory structures with text-based ones. Each prediction the nearest See Sound device makes is delivered in real time as a text notification to the user’s phone and other smart devices.

The story of the data, however, is the true innovation behind See Sound. To overcome this massive technological barrier, we found our answer in an unlikely place: YouTube, which hosts over a billion hours of video filled with sound. We used a dataset of more than 2 million human-labeled 10-second sound clips drawn from YouTube videos. Each clip was manually analyzed, annotated, and organized into Google’s AudioSet. Every sound in our model draws on data from several thousand YouTube audio samples, adding up to a massive library that our device relies on to identify sounds and visualize them for users. We trained our machine-learning model on this data and reached a mean average precision (mAP) of 0.360, outperforming both the state-of-the-art single-level attention model (0.327) and Google’s baseline (0.314).
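Mean average precision rewards a model for ranking clips that truly contain a sound above clips that don’t, averaged across every sound class, so a figure like 0.360 is a ranking score rather than a percentage of correct predictions. The Python below is a minimal sketch of the standard uninterpolated metric, not See Sound’s own evaluation code; the function names, class names, and toy scores are hypothetical, and library implementations (for example, scikit-learn’s `average_precision_score`) may handle tied scores differently.

```python
from typing import Sequence


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Uninterpolated average precision for a single sound class.

    scores: model confidence for each clip; labels: 1 if the clip truly
    contains the sound, else 0. Tied scores keep their input order.
    """
    # Rank clips from most to least confident.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precision_sum += hits / rank  # precision at this positive clip's rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(
    scores_by_class: Sequence[Sequence[float]],
    labels_by_class: Sequence[Sequence[int]],
) -> float:
    """Average the per-class AP, skipping classes with no positive clips."""
    aps = [
        average_precision(scores, labels)
        for scores, labels in zip(scores_by_class, labels_by_class)
        if any(labels)
    ]
    return sum(aps) / len(aps) if aps else 0.0


# Toy data for two hypothetical classes ("baby crying", "glass breaking"),
# four clips each.
scores = [[0.9, 0.8, 0.3, 0.1], [0.2, 0.7, 0.6, 0.4]]
labels = [[1, 0, 1, 0], [0, 0, 1, 1]]
print(round(mean_average_precision(scores, labels), 3))  # 0.708
```

With these toy values the first class scores 5/6 (about 0.833) and the second 7/12 (about 0.583), so their mean is 17/24, about 0.708. A model only raises its mAP by pushing true clips higher in the ranking for every class, which is why the metric is a common way to compare audio-tagging models such as those cited above.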

With the help of YouTube, See Sound is poised to drastically change how Deaf people live at home and to empower them to lead more independent lives. Thanks to viral sensations like Chewbacca Mom and Dramatic Chipmunk, the Deaf and hard of hearing can finally see sound.

After investing more than $160,000 over the past five years, we’re ready to launch See Sound worldwide. We expect our first production run of 5,000 units to be distributed by the fourth fiscal quarter of 2020. In parallel, we are petitioning local, state, and federal governments in the US to recognize See Sound as accessible technology under the Americans with Disabilities Act (ADA), which would allow the government to cover the cost of the product. Our intellectual property is fully protected by multiple patents, which cover any hardware and software that recognizes sound and notifies users through their smart devices.

The path to market is clear. We have spoken with numerous nationally recognized organizations and received their endorsements and letters of intent. These connections and advocacy relationships give us a strong foundation for reaching a significant portion of the Deaf community at launch. We have also met with Amazon, which wants to offer See Sound on its platform as soon as the product is commercially available.

For the underserved Deaf community, See Sound is a life-changing accessibility tool, but it is also a significant advance in sound recognition technology that can benefit a much wider population, including the elderly and military veterans. The Deaf and hard of hearing don’t simply want this product; they need it. No longer will people need to rely on partners or pets to alert them to noises around their home. Everyone can see sound.